PyTorch DataLoader 加载数据¶

在 DataLoader 中指定 batch_size 后,可以将输入数据划分为多个 Batch,分批输入到网络中训练。

读取 npy 数据¶

Python

X_train = np.load("./X_train.npy")

y_train = np.load("./y_train.npy")

X_test = np.load("./X_test.npy")

y_test = np.load("./y_test.npy")

print("Training samples: ", X_train.shape[0])

print("Testing samples: ", X_test.shape[0])

将数据转换为 tensor¶

Python

trainx = torch.from_numpy(np.array(X_train)).reshape(

len(X_train), 1, 9, 30

) # transform to tensor

trainy = torch.from_numpy(np.array(y_train)).reshape(

len(y_train), 1

) # label for regression

testx = torch.from_numpy(np.array(X_test)).reshape(len(X_test), 1, 9, 30)

testy = torch.from_numpy(np.array(y_test)).reshape(len(y_test), 1)

batch_size = 1000

class Factor_data(Dataset):

def __init__(self, train_x, train_y): # 默认输入的时候就已经是 tensor

self.len = len(train_x)

self.x_data = train_x

self.y_data = train_y

def __getitem__(self, index):

return self.x_data[index], self.y_data[index]

def __len__(self):

return self.len

# put into data loader

train_data = Factor_data(trainx, trainy)

train_loader = DataLoader(

dataset=train_data, batch_size=batch_size, shuffle=False

) # 不打乱数据集

test_data = Factor_data(testx, testy)

test_loader = DataLoader(

dataset=test_data, batch_size=batch_size, shuffle=False

) # 不打乱数据集

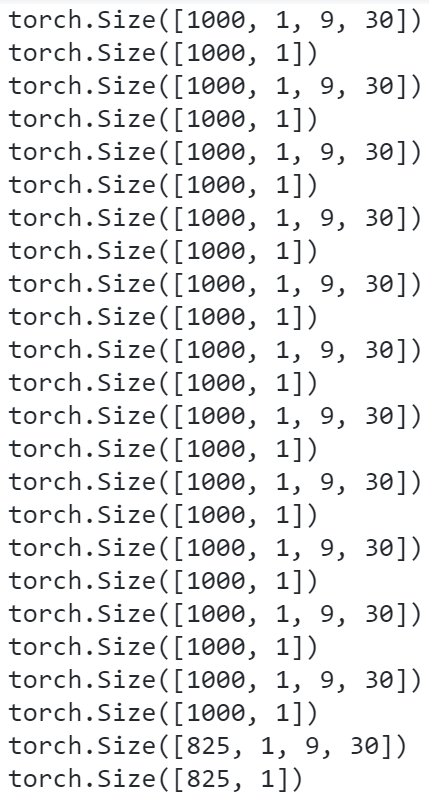

查看 train_loader 中的数据情况¶

可以看到,数据总量刚好为 11825,且前 11 个 Batch 的 Size 都是 1000(因为指定了batch_size=1000),最后一个 Batch 的 Size 是 825。