手写基于 ID3 算法的决策树模型¶

不借助现成的机器学习框架,使用 NumPy 实现基于 ID3 算法的决策树模型。

经验与心得¶

-

计算经验条件熵时,求和项里其实没有负号了,但我 之前写的笔记中误加了负号。这属于是被自己之前写的笔记坑了。正确的公式如下:

\[ H(D) = -\sum_{k=1}^{K} p(k) \log_2 p(k) \]\[ H(D|A) = \sum_{i=1}^{n}\frac{|D_i|}{|D|} H(D_i) \] -

在编写测试程序时暂时把

feature_index设为 1,在后面忘记改成通用的feature_index了。

-

纸上得来终觉浅,绝知此事要躬行。看网络上的机器学习教程、听老师上课讲算法原理和步骤,都不如自己从头实现一个简单的算法让自己印象更深刻。虽然模型还有各种缺陷,但最终得到约 90% 的预测准确率还是会让自己觉得惊喜。这一看似简单的算法,在经历了各种细节上的报错之后,我相信会对它有更深的理解。

导入必要的包¶

导入数据¶

这里使用了 UCI 提供的 汽车评估数据集,也可以在下载。它的特征和标签都是类别型的,适合用 ID3 算法实现决策树。

我们的任务是用购买价格、维修成本、载客数量等特征预测汽车评估的等级。标签一共有 4 个值,分别是:unacc、acc、good、vgood,即最差到最好。

header = ["buying", "maint", "doors", "persons", "lug_boot", "safety", "class"]

data = pd.read_csv("car.data", names=header, header=None)

## buying maint doors persons lug_boot safety class

## 0 vhigh vhigh 2 2 small low unacc

## 1 vhigh vhigh 2 2 small med unacc

## 2 vhigh vhigh 2 2 small high unacc

## 3 vhigh vhigh 2 2 med low unacc

## 4 vhigh vhigh 2 2 med med unacc

## ... ... ... ... ... ... ... ...

## 1723 low low 5more more med med good

## 1724 low low 5more more med high vgood

## 1725 low low 5more more big low unacc

## 1726 low low 5more more big med good

## 1727 low low 5more more big high vgood

##

## [1728 rows x 7 columns]

定义构建决策树所需的 class¶

定义 Node 类,用于表示树的节点¶

Node 类的属性有:

- feature_index: 此节点用于划分的特征的索引

- children: 此节点的子节点,是一个字典,键是特征的取值,值是子节点,子节点也是 Node 类

- info_gain: 此节点的信息增益

- value: 此节点的类别。如果此节点是叶子节点,则此属性有值,否则为 None

class Node:

def __init__(self, feature_index=None, children=None, info_gain=None, value=None):

"""constructor"""

# for decision node

self.feature_index = feature_index

self.children = children

self.info_gain = info_gain

# for leaf node

self.value = value

定义 Tree 类,用于表示决策树¶

Tree 类的属性有:

- root: 树的根节点,是 Node 类

- min_samples_split: 决策树的最小分裂样本数,如果样本数小于此值,则不再分裂

- max_depth: 决策树的最大深度,如果深度大于此值,则不再分裂

关键函数是:

- build_tree: 用于构建决策树

- get_best_feature: 用于找到最佳划分特征

class DecisionTreeClassifier:

def __init__(self, min_samples_split=2, max_depth=2):

"""constructor"""

# initialize the root of the tree

self.root = None

# stopping conditions

self.min_samples_split = min_samples_split

self.max_depth = max_depth

def build_tree(self, dataset, curr_depth=0):

"""recursive function to build the tree"""

X, Y = dataset[:, :-1], dataset[:, -1]

num_samples, num_features = np.shape(X)

# split until stopping conditions are met

if num_samples >= self.min_samples_split and curr_depth <= self.max_depth:

# find the best feature

best_feature = self.get_best_feature(dataset, num_features)

# check if information gain is positive

if best_feature["info_gain"] > 0:

children = []

for child in best_feature["children"]:

# 对每一个 child,迭代调用 build_tree 函数

children.append(

(child[0], self.build_tree(child[1], curr_depth + 1))

)

return Node(

best_feature["feature_index"], children, best_feature["info_gain"]

)

# compute leaf node

leaf_value = self.calculate_leaf_value(Y)

# return leaf node

return Node(value=leaf_value)

def get_best_feature(self, dataset, num_features):

"""function to find the best split"""

# dictionary to store the best split

best_feature = {}

max_info_gain = -float("inf")

# 求数据集的标签

y = dataset[:, -1]

# 求数据集的样本数

D = y.shape[0]

# 求数据集的经验熵

H_D = self.entropy(y)

# loop over all the features

for feature_index in range(num_features):

feature_values = dataset[:, feature_index]

# 获取该特征的所有去重值

feature_values = np.unique(feature_values)

# 计算该特征的经验条件熵

H_D_A = 0

for i in feature_values:

# 求该特征的值等于 i 时的标签

y_i = dataset[dataset[:, feature_index] == i][:, -1]

# 求该特征的值等于 i 时的经验熵

H_D_i = self.entropy(y_i)

# 求该特征的值等于 i 的比例

weight_i = y_i.shape[0] / D

# 求该特征的经验条件熵

H_D_A += weight_i * H_D_i

# 求该特征的信息增益

curr_info_gain = H_D - H_D_A

# update the best split if needed

if curr_info_gain > max_info_gain:

best_feature["feature_index"] = feature_index

best_feature["children"] = [

(i, dataset[dataset[:, feature_index] == i]) for i in feature_values

]

best_feature["info_gain"] = curr_info_gain

max_info_gain = curr_info_gain

return best_feature

def entropy(self, y):

"""function to compute entropy"""

class_labels = np.unique(y)

entropy = 0

for cls in class_labels:

p_cls = len(y[y == cls]) / len(y)

entropy += -p_cls * np.log2(p_cls)

return entropy

def calculate_leaf_value(self, Y):

"""function to compute leaf node"""

Y = list(Y)

return max(Y, key=Y.count)

def print_tree(self, tree=None, indent=" "):

"""function to print the tree"""

# 如果没有传入 tree 这个参数,则默认需要打印 self.root 这棵树

if not tree:

tree = self.root

# 如果是叶子节点,则打印叶子节点的值

if tree.value is not None:

print("<{}>".format(tree.value))

# 如果是决策节点,则打印决策节点的信息,包括特征名称和子节点

else:

# print('\n')

print("_{}_".format(str(columns[tree.feature_index])))

for i in tree.children:

print("{}{}:".format(indent, i[0]), end="")

self.print_tree(i[1], indent + indent)

def fit(self, X, Y):

"""function to train the tree"""

dataset = np.concatenate((X, Y), axis=1)

self.root = self.build_tree(dataset)

def predict(self, X):

"""function to predict new dataset"""

preditions = [self.make_prediction(x, self.root) for x in X]

return preditions

def make_prediction(self, x, tree):

"""function to predict a single data point"""

if tree.value != None:

return tree.value

feature_val = x[tree.feature_index]

child_index = [

idx for idx, tup in enumerate(tree.children) if tup[0] == feature_val

][0]

return self.make_prediction(x, tree.children[child_index][1])

划分数据集¶

columns = data.columns

X = data.iloc[:, :-1].values

Y = data.iloc[:, -1].values.reshape(-1, 1)

from sklearn.model_selection import train_test_split

X_train, X_test, Y_train, Y_test = train_test_split(

X, Y, test_size=0.3, random_state=41

)

## array([['low', 'vhigh', '2', '2', 'small', 'low'],

## ['low', 'low', '4', '4', 'big', 'high'],

## ['med', 'med', '2', '4', 'med', 'low'],

## ...,

## ['vhigh', 'med', '5more', 'more', 'big', 'low'],

## ['med', 'med', '2', 'more', 'big', 'low'],

## ['med', 'vhigh', '4', '4', 'med', 'med']], dtype=object)

## array([['unacc'],

## ['vgood'],

## ['unacc'],

## ...,

## ['unacc'],

## ['acc']], dtype=object)

训练决策树模型¶

classifier = DecisionTreeClassifier(min_samples_split=3, max_depth=3)

classifier.fit(X_train, Y_train)

classifier.print_tree()

## _safety_

## high:_persons_

## 2:<unacc>

## 4:_buying_

## high:_maint_

## high:<acc>

## low:<acc>

## med:<acc>

## vhigh:<unacc>

## low:_maint_

## high:<acc>

## low:<good>

## med:<good>

## vhigh:<acc>

## med:_maint_

## high:<acc>

## low:<good>

## med:<acc>

## vhigh:<acc>

## vhigh:_maint_

## high:<unacc>

## low:<acc>

## med:<acc>

## vhigh:<unacc>

## more:_buying_

## high:_maint_

## high:<acc>

## low:<acc>

## med:<acc>

## vhigh:<unacc>

## low:_maint_

## high:<vgood>

## low:<vgood>

## med:<vgood>

## vhigh:<acc>

## med:_maint_

## high:<acc>

## low:<vgood>

## med:<vgood>

## vhigh:<acc>

## vhigh:_maint_

## high:<unacc>

## low:<acc>

## med:<acc>

## vhigh:<unacc>

## low:<unacc>

## med:_persons_

## 2:<unacc>

## 4:_buying_

## high:_lug_boot_

## big:<acc>

## med:<unacc>

## small:<unacc>

## low:_maint_

## high:<acc>

## low:<good>

## med:<acc>

## vhigh:<unacc>

## med:_maint_

## high:<acc>

## low:<acc>

## med:<acc>

## vhigh:<acc>

## vhigh:_maint_

## high:<unacc>

## low:<acc>

## med:<unacc>

## vhigh:<unacc>

## more:_buying_

## high:_lug_boot_

## big:<acc>

## med:<acc>

## small:<unacc>

## low:_maint_

## high:<acc>

## low:<acc>

## med:<good>

## vhigh:<acc>

## med:_maint_

## high:<acc>

## low:<good>

## med:<acc>

## vhigh:<acc>

## vhigh:_maint_

## high:<unacc>

## low:<acc>

## med:<unacc>

## vhigh:<unacc>

若决策树太庞大,可能无法在屏幕上完全显示,可以到文本文件中查看完整决策树。具体实现方法可参见 这篇帖子。

模型评估¶

import sklearn.metrics as metrics

def evaluate(true, pred):

print("accuracy:{:.2%}".format(metrics.accuracy_score(true, pred)))

训练集上的表现¶

测试集上的表现¶

存在的缺陷¶

本文实现了基于 ID3 算法的决策树模型,但存在如下问题不能很好地解决:

-

只支持离散类型的特征变量,若特征为连续值,则不适用于 ID3 算法。

-

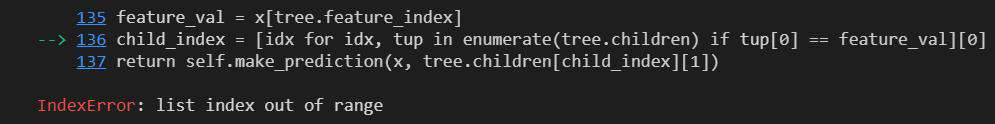

若测试集中出现了训练集中没有见过的样本,那么基于训练集生成的决策树就不知道如何分枝,导致报错。具体报错如下:

这里的报错是 children 中找不到这个特征值对应的 child tree。这本质上是因为,测试集的样本太大,而训练集的样本太小。在训练集的分枝过程中,我们能够把训练集的样本完全分类。但现在验证集的样本太大,其中有些样本是在训练集中没见过的,这时放到基于训练集得到的模型中,模型也不知道应该怎么分。

这个报错可能是由于测试集的比例太大(导致训练集中没有和测试集类似的样本),或者决策树的深度太大(训练出的决策树深度太大,到树的底部时,可分枝的特征变量的属性比较少,如果测试集刚好出现了新的属性,那么决策树就不知道如何继续分枝)。